Sharing our perspectives on timely topics

A roadmap to gender in language

Gender and language are inextricably linked in various ways. Together with experts from the fields of linguistics, psychology and neurolinguistics, we tried to disentangle this topic. Follow us on a journey to discover the origins, present, and future of gender in language.

Emmental and AI

AI innovations like the chatbot ChatGPT are enthusiastically celebrated, but they also trigger fears. Ethical discussions on AI should never revolve around the technology alone, says bioethicist Samia Hurst. Above all, the social, political and economic frameworks in which these technologies are used must be right.

Silicon brains

Valentina Borghesani is exploring how meaning is created in our brains. To do so, she works with aphasia patients, but increasingly also with AI language models that can be used to simulate processes in the brain.

By Roger Nickl. © Celia Lazzarotto

Lemon, sour, juicer: Which two of these three words do you think belong […]

Potent, but only moderately intelligent

How smart is ChatGPT really? Prof. Martin Volk and Prof. Paola Merlo, researchers at the NCCR Evolving Language, are testing the chatbot and developing their own smart language models that are more efficient, greener, and fairer.

By Roger Nickl. © Pixabay

Since ChatGPT was launched by the American company OpenAI in November last year, […]

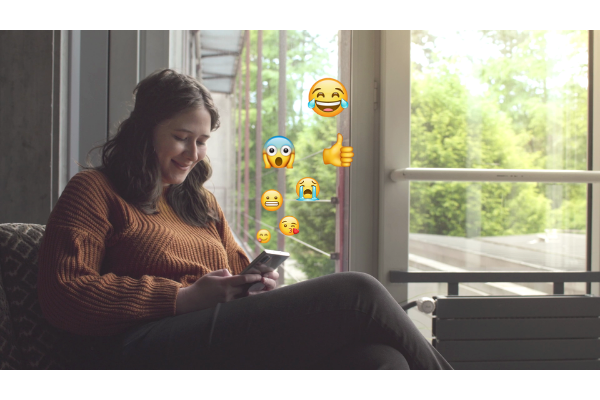

When our emotions go digital

Researchers from the University of Lausanne and the EPFL are calling on the Swiss population to annotate emojis. The study aims to unravel the way in which we share our emotions via instant messaging. This is an innovative research topic that is particularly interesting for the preservation of Switzerland’s linguistic heritage.

Marketing, money and technology: behind the scenes of GPT-3

Over the past six months, GPT-3, a language model that uses deep learning to produce human-like text, has made headlines. Some of the articles were even written by GPT-3 itself. Among other things, the model has been described as “stunning”, a “better writer than most humans”, but also a bit “frightening”. From poetry to human-like conversation, its capabilities seem limitless… but are they really? How does GPT-3 work, and what does it say about the future of artificial intelligence?

Want more? Discover some papers "in a nutshell" below.

Our social interactions begin at a young age

Early in life, children demonstrate social skills and a strong desire to interact with their peers. According to a study led by researchers from the University of California, San Diego and the University of Neuchâtel, they engage in social interactions more often than our closest relatives, the great apes.

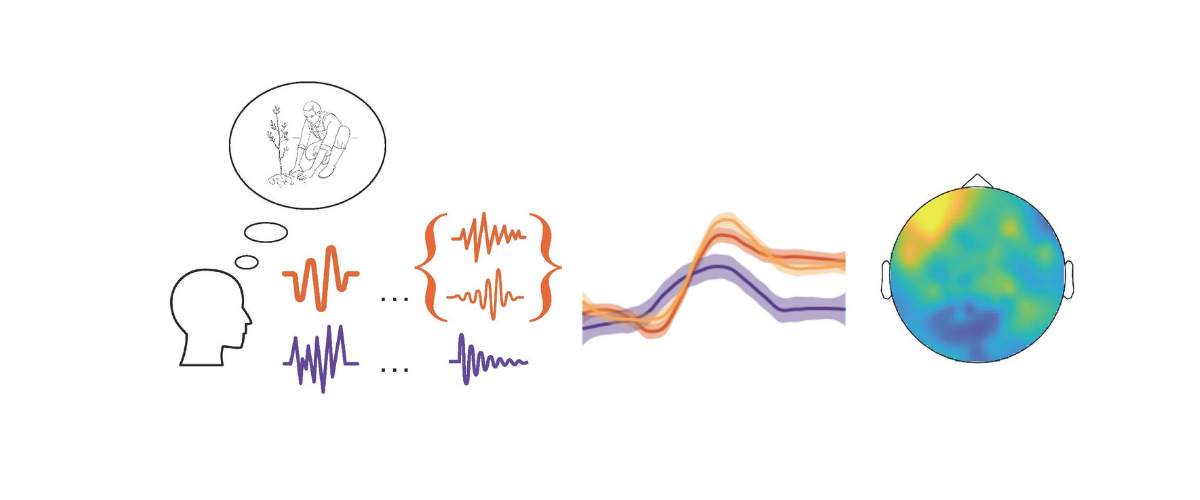

New EEG evidence on sentence production

Some languages require less neural activity than others, but these are not necessarily the ones we would expect. In a study published today in the journal PLOS Biology, researchers at the University of Zurich have shown that languages that are often considered “easy” actually require an enormous amount of work from our brains.

Bonobos are sensitive to joint commitments

When abruptly interrupted during a social activity with another bonobo, bonobos resume the activity with the same partner as soon as the interruption is over.

Dwarf mongooses may combine units in order to create new meanings

Dwarf mongooses seem to produce a complex call that may be a combination of distinct call units.